Imagine you’re a passionate chef who loves hosting dinner parties. If you could set up your kitchen, pantry, and refrigerator from scratch, you’d organize everything to make it as easy as possible to create your best meal.

You know success hinges on how well you prepare and combine ingredients. So, you’d want to organize them as efficiently and logically as possible. For Taco Tuesday, you’d group all the Mexican flavors together. For a diner-themed evening, you’d have all your burger ingredients right next to your mustard, ketchup, and french fries.

That would be your culinary command center – and it would work a lot like a Large Language Model (LLM).

How Large Language Models Work: A Dinner Party Analogy

The Ingredients

Every ingredient in your kitchen has a unique flavor, texture, color, and purpose. The broader and more diverse your ingredient list, the more dishes you can create. The more you understand how they relate, the better you can organize them. And you know that locally grown tomatoes make a better sauce than the canned version.

Large language models like OpenAI’s ChatGPT, Microsoft’s Copilot, and Google’s Gemini break text (the ingredients) into small pieces called tokens and transform each one into a list of numbers, called an embedding, that captures its ‘flavor,’ ‘texture,’ ‘color,’ and ‘purpose.’ This foundational step is what lets the model process language at all. And just as you’d choose fresh tomatoes over canned, developers curate high-quality datasets that represent the nuances of different languages and cultures. This helps the AI produce outputs that resonate across a wide range of topics, styles, and perspectives.
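To make ‘turning text into numbers’ a little more concrete, here is a toy sketch in Python. Everything in it is an illustrative assumption: the seven-word vocabulary, the split-on-spaces tokenizer, and the random four-number vectors. Real models use learned subword tokenizers and embedding vectors with hundreds or thousands of numbers, and those numbers are learned during training rather than drawn at random.

```python
import random

# Toy vocabulary (assumption): real models use learned subword tokenizers
# with tens of thousands of entries.
VOCAB = ["<unk>", "taco", "tuesday", "salsa", "burger", "fries", "ketchup"]
TOKEN_ID = {word: i for i, word in enumerate(VOCAB)}

EMBED_DIM = 4  # real models use hundreds to thousands of dimensions

# Toy embedding table: one list of numbers per token ID.
# Random here; in a trained model these values are learned.
rng = random.Random(0)
EMBEDDINGS = [[rng.uniform(-1.0, 1.0) for _ in range(EMBED_DIM)] for _ in VOCAB]


def tokenize(text: str) -> list[int]:
    """Split on whitespace and map each word to its ID (unknown words -> 0)."""
    return [TOKEN_ID.get(word, TOKEN_ID["<unk>"]) for word in text.lower().split()]


def embed(text: str) -> list[list[float]]:
    """Look up the vector of numbers for each token in the text."""
    return [EMBEDDINGS[token_id] for token_id in tokenize(text)]


print(tokenize("Taco Tuesday salsa"))  # [1, 2, 3]
vectors = embed("Taco Tuesday salsa")
print(len(vectors), len(vectors[0]))  # 3 4
```

Each word becomes an ID, and each ID becomes a short list of numbers; from this point on, the model works only with those numbers.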

Taste Testing

As you experiment with ingredient combinations, your culinary intuition sharpens. You’re not just tossing ingredients together; you’re tasting, adjusting, and learning with each dish. What tasted great? What missed the mark? AI models refine their handling of language through a similar iterative process.

Through pattern learning, AI tools analyze information and learn ‘taste’: which combinations work well and which don’t. Just as a chef learns from feedback, a model goes through a cycle of trial, feedback, and adjustment. It makes a prediction, measures how far off it was, and adjusts its internal settings to do better next time. Repeated at enormous scale, this cycle produces steadily better results. That’s why AI output can be so impressive, and why a new round of improvements a few weeks later can impress us all over again.
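The trial, feedback, and adjustment cycle can be sketched with a single adjustable number. This is ordinary gradient descent on a made-up one-parameter model, not how any particular LLM is trained. Real models adjust billions of parameters, but the loop has the same shape: predict, measure the error, and nudge the parameter to reduce it.

```python
# Toy training data (assumption): inputs x and the "right answers" y = 3 * x.
DATA = [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0)]


def train(learning_rate: float = 0.05, steps: int = 200) -> float:
    """Learn a single weight w so that w * x approximates y."""
    w = 0.0  # start with a bad guess
    for _ in range(steps):
        # Trial + feedback: gradient of the mean squared error of w * x vs. y.
        grad = sum(2 * (w * x - y) * x for x, y in DATA) / len(DATA)
        # Adjustment: move w a small step in the direction that reduces error.
        w -= learning_rate * grad
    return w


print(round(train(), 3))  # 3.0
```

The weight starts at 0 and converges on 3, the value that makes every prediction match its target. No one tells the model the answer directly; it finds it by repeatedly measuring and shrinking its own error.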

Mise-En-Place

Mise-en-place, or ‘everything in its place,’ is a chef’s pre-cooking ritual. It’s not just about organizing ingredients; it’s a philosophy that ensures efficiency and readiness. Storing burgers and french fries together because they complement each other helps you intuitively create dishes on the fly. Large language models like ChatGPT or Gemini use a similar strategy, with words taking on a multidimensional form.

An LLM arranges data in a vast, multidimensional vector space. Imagine this space as an enormous kitchen where each ingredient (word or phrase) has its own storage spot. Its flavor, usage frequency, and compatibility with other ingredients dictate where it goes. Large language models put words like ‘ocean’ and ‘sea’ near ‘water’ because they appear in similar contexts and have related meanings. Organizing data like this is more than a neat trick; it’s essential for the model to generate language efficiently. It allows an LLM to glance at its surroundings and decide which ingredients make the content sound best. This organization is a big part of how AI creates coherent, engaging, and often innovative content.
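Closeness in a vector space is commonly measured with cosine similarity, which compares the directions two vectors point in. The three-number vectors below are hand-picked assumptions chosen to mimic the ‘ocean,’ ‘sea,’ and ‘water’ example. A real model learns its vectors, and they have far more dimensions.

```python
import math

# Hand-picked toy vectors (assumption): real embeddings are learned and have
# hundreds or thousands of dimensions.
VECTORS = {
    "ocean": [0.90, 0.80, 0.10],
    "sea": [0.85, 0.75, 0.15],
    "water": [0.70, 0.90, 0.20],
    "burger": [0.10, 0.05, 0.95],
}


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """1.0 means the vectors point the same way; near 0 means unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def nearest(word: str) -> list[tuple[str, float]]:
    """Rank every other word by similarity to `word`, closest first."""
    target = VECTORS[word]
    scores = [
        (other, cosine_similarity(target, vec))
        for other, vec in VECTORS.items()
        if other != word
    ]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)


for other, score in nearest("ocean"):
    print(f"{other}: {score:.2f}")
```

With these toy numbers, ‘sea’ and ‘water’ rank far above ‘burger’ as neighbors of ‘ocean’. In the kitchen analogy, they sit on the same shelf.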

The Menu

With your kitchen ready and ingredients organized, you’re set to craft a menu. You decide which dishes to serve based on the ingredients you have and what you know about your guests’ preferences. This is akin to how AI generates text, selecting words (or dishes) based on what has come before and what makes the most sense to come next.

Large language models like ChatGPT, Copilot, and Claude generate text one token (a word or a piece of a word) at a time. They are trained on enormous amounts of text, often billions or even trillions of tokens. At each step, they choose the next token based on the patterns learned during that training and on everything written so far. This mirrors how a chef plans a meal, ensuring each new course (or word) complements the last.
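The one-token-at-a-time loop can be sketched with a tiny hand-written table of next-word probabilities. The table is a hypothetical stand-in for a trained model. A real LLM computes these probabilities with a neural network that considers the whole preceding text, not just the last word, and it often samples from them rather than always taking the top choice.

```python
# Hypothetical next-word probabilities, standing in for a trained model.
# Each entry maps the previous word to its candidate next words.
NEXT_WORD = {
    "<start>": {"tonight": 0.7, "we": 0.3},
    "tonight": {"we": 1.0},
    "we": {"serve": 0.7, "eat": 0.3},
    "serve": {"tacos": 0.8, "burgers": 0.2},
    "tacos": {"<end>": 1.0},
    "burgers": {"<end>": 1.0},
    "eat": {"<end>": 1.0},
}


def generate(max_words: int = 10) -> str:
    """Greedy decoding: repeatedly append the most probable next word."""
    words = []
    previous = "<start>"
    for _ in range(max_words):
        candidates = NEXT_WORD.get(previous, {"<end>": 1.0})
        best = max(candidates, key=candidates.get)
        if best == "<end>":
            break
        words.append(best)
        previous = best
    return " ".join(words)


print(generate())  # tonight we serve tacos
```

Each new word is chosen only after the previous one is on the table, just as each course is planned to follow the last.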

The Meal

After flavor experimentation, meticulous preparation, and putting everything together, you’re ready to serve. This is the moment you realize you’ve taken an idea from your head and placed it on a plate. Each dish on the table is a testament to your ability to harmonize diverse ingredients, balance flavors, and cater to the nuances of your guests.

Similarly, in large language models like ChatGPT and Gemini, the final result is the culmination of numerous complex processes: data encoding, pattern learning, vector space mapping, and next-word prediction all play a role. This output, whether a paragraph, a poem, or an article, reflects everything the model has learned. It is the end product of a transformation from raw data to coherent, engaging, and meaningful language.

The Last Byte

The evening winds down, and the last plates are cleared. You can now appreciate how a simple idea became a fully realized dining experience. The evolution of LLMs from initial data input to nuanced output mirrors your path from pantry to plate. It is a process of continual learning and adapting. Just as a chef experiments with new recipes and techniques, AI models evolve as developers refine them with new data, interactions, and feedback.

So, the next time you interact with AI-generated content, remember the artistry. Realize that the results you see are a blend of science and creativity, ingredients and insights, all coming together to serve up knowledge – one byte at a time.

From Kitchen to Keyboard – Practical Takeaways

Prompt Precision

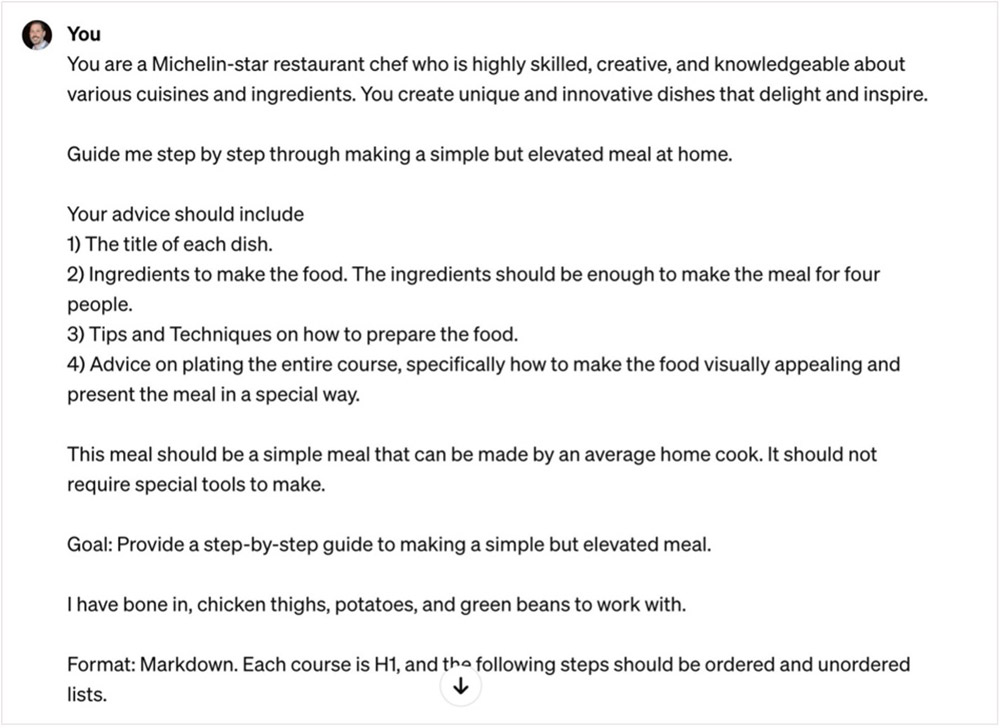

The clarity and specificity of your instructions significantly influence the quality of the response you get. Your prompt is the recipe you’re giving AI. The more detailed and specific it is, the more likely you are to get the results you want. If you’re seeking advice, guidance, or information, be as explicit as possible. Here’s what an effective prompt for ChatGPT, Gemini, Copilot, and other LLMs should include:

- A specific persona

- The task you want it to do

- The exact steps to complete that task

- Any context or constraints you want it to abide by

- The goal you’re trying to accomplish

- How you want it to format the response

This precision helps the large language model know exactly what area in its vast pantry of information to home in on, allowing it to serve up exactly what you’re craving.
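One way to make sure no ingredient is forgotten is to fill in a template. The sketch below is a hypothetical helper. `PromptSpec` and `build_prompt` are made-up names, not part of any AI platform’s API, and the sample menu details are invented for illustration. The helper simply assembles the six elements into plain text that you could paste into ChatGPT or any other chat interface.

```python
from dataclasses import dataclass


@dataclass
class PromptSpec:
    """Hypothetical container for the six elements of a precise prompt."""
    persona: str
    task: str
    steps: list[str]
    constraints: str
    goal: str
    output_format: str


def build_prompt(spec: PromptSpec) -> str:
    """Assemble the elements into one clearly labeled prompt string."""
    numbered_steps = "\n".join(
        f"{i}. {step}" for i, step in enumerate(spec.steps, start=1)
    )
    return (
        f"You are {spec.persona}.\n"
        f"Task: {spec.task}\n"
        f"Steps:\n{numbered_steps}\n"
        f"Context and constraints: {spec.constraints}\n"
        f"Goal: {spec.goal}\n"
        f"Format: {spec.output_format}"
    )


example = PromptSpec(
    persona="an experienced caterer",
    task="plan a Taco Tuesday menu for 12 guests",
    steps=["List three main dishes", "Suggest two sides", "Add one dessert"],
    constraints="two guests are vegetarian; the budget is modest",
    goal="a menu I can shop for and cook in one afternoon",
    output_format="a bulleted list grouped by course",
)
print(build_prompt(example))
```

The template enforces nothing. It simply makes a missing element, such as an empty goal or format, easy to spot before you send the prompt.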

Prompt Example for ChatGPT

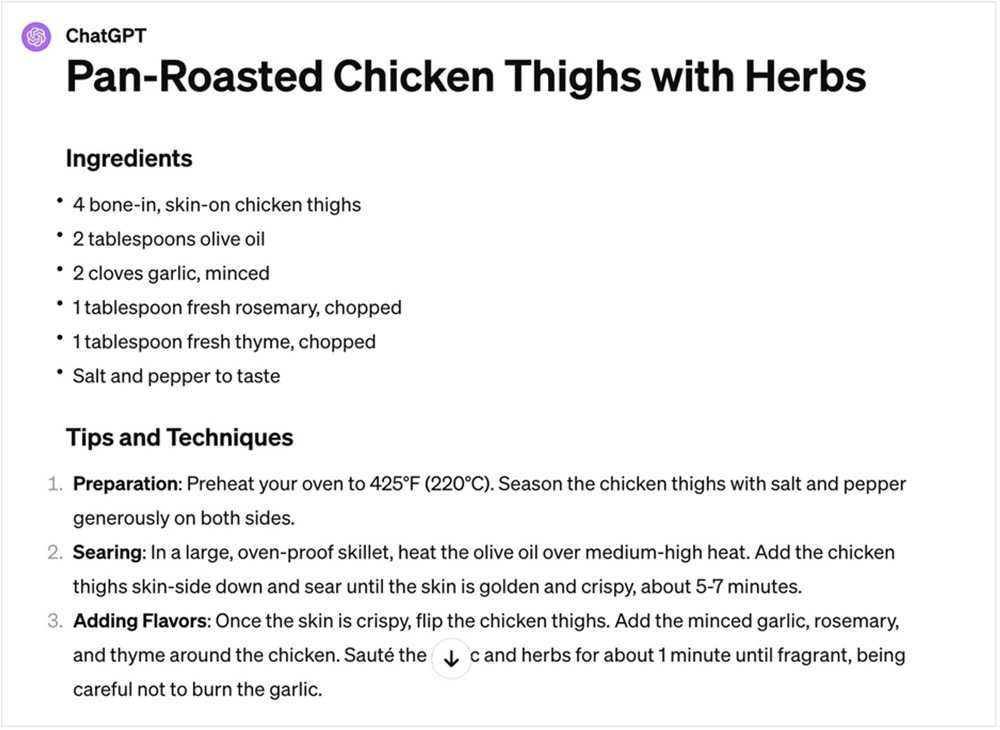

Result Example for ChatGPT

Iterative Engagement

Engaging with AI is an iterative process. Refine your prompts based on the responses you receive. If the first ‘dish’ isn’t to your taste, tweak the ingredients of your recipe. Make liberal use of the pencil ‘edit’ icon to revise an earlier prompt. Rephrase for clarity, add more context, or ask follow-up questions based on the initial response. Through this process, you’ll not only get closer to the answers you want but also become more adept at working with AI.

Organizing Your Tools

Your digital AI workspace is your kitchen. Familiarize yourself with the tools at your disposal: which AI platforms are available, what each does well and poorly, and how they can be combined to enhance your productivity or creativity. Whether you’re using AI for writing assistance, data analysis, or creative exploration, knowing what each tool is best suited for allows you to orchestrate them effectively. A chef doesn’t use a whisk when the recipe calls for a spatula. Don’t try to make an AI tool do something it wasn’t designed to do; you’ll just end up frustrated.

By adopting these strategies, you’re not just interacting with AI; you’re a collaborator in the creative process. You’re mastering your digital kitchen on your way to increased productivity and innovation.

Whether you want to learn more about how large language models work or how to best integrate AI into your business, reach out to connect@doyontechgroup.com today to get started.

––––––

About the Author

Greg Starling serves as the Head of Emerging Technology for Doyon Technology Group. He has been a thought leader for the past twenty years, focusing on technology trends, and has contributed to published articles in Forbes, Wired, Inc., Mashable, and Entrepreneur magazines. He holds multiple patents and has twice been named Innovator of the Year by the Journal Record. Greg also runs one of the largest AI information communities worldwide.

Doyon Technology Group (DTG), a subsidiary of Doyon, Limited, was established in 2023 in Anchorage, Alaska, to manage the Doyon portfolio of technology companies: Arctic Information Technology (Arctic IT®), Arctic IT Government Solutions, and designDATA. DTG companies offer a variety of technology services, including managed services, cybersecurity, and professional software implementations and support for cloud business applications.